Sanity checks: Compression algorithm 'snappy' previously failed test | Resolving Snappy errors in HBase



I have been trying to resolve a weird HBase issue related to Snappy compression. Following is the detailed description of everything related to this issue: hbase ...

I'm working with a fresh install of HBase 2.1.4 with Hadoop 3.1.2 on RHEL 7.6. In the HBase shell, I can create a non-compressed table without a problem, but this command: create ...

IOException: Compression algorithm 'snappy' previously failed test. I went through the official tutorial again: http://hbase.apache.org/book/snappy.compression.html. It still reported the error, and later I found the difference. ...

What this means is that our TestCompressionTest passes for Hadoop before 3.3.1, but at 3.3.1 the assertion that the SNAPPY and LZ4 compressors should fail, because no lib ...

Use CompressionTest to verify that Snappy support is enabled and that the libraries can be loaded ON ALL NODES of your cluster: $ hbase ...

SNAPPY is not suitable for beginners. They may get exceptions like: Caused by: org.apache.hadoop.hbase.ipc.RemoteWithExtrasException(org.apache.hadoop.hbase.DoNotRetryIOException): ...

One of the features of HBase is to enable different types of compression for a column family. It is recommended that testing be done for your use case, but this blog shows how Snappy compression can reduce ...
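The CompressionTest check mentioned above could be scripted so that it is easy to repeat on every node. The following is a minimal sketch, not an official tool: `org.apache.hadoop.hbase.util.CompressionTest` is the HBase utility class, while the helper names, the default scratch path `file:///tmp/compression-test`, and the assumption that a failed check ends with a non-zero exit status are illustrative.

```python
import subprocess

# HBase utility class that tries to write and read back a file with the given codec.
COMPRESSION_TEST_CLASS = "org.apache.hadoop.hbase.util.CompressionTest"


def compression_test_command(codec, path="file:///tmp/compression-test"):
    """Build the argument list for `hbase <class> <path> <codec>`.

    The default path is a hypothetical scratch location, not a required one.
    """
    return ["hbase", COMPRESSION_TEST_CLASS, path, codec]


def codec_works_locally(codec):
    """Run the check on the current node; True when the command exits with status 0.

    Assumption: a codec that cannot be loaded makes the command exit non-zero.
    """
    result = subprocess.run(compression_test_command(codec))
    return result.returncode == 0
```

Calling `codec_works_locally("snappy")` on each region server (for example over SSH) would show which nodes are missing the native library; the function needs the `hbase` launcher on the `PATH`.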

I haven't written a blog post in a long time, but I ran into some problems while studying databases and I tend to forget things, so I am writing them down. When running HBase under the Spring Boot framework, the following problem appeared: org.apache.hadoop.hbase.DoNotRetryIOException: java.lang.IllegalAccessError: tried to access method com.google.commo...

A sanity test or sanity check is a basic test to quickly evaluate whether a claim or the result of a calculation can possibly be ...

... it fails the basis of a sanity check: if the logic is correct, the test cannot fail, whereas the logic of TCP is correct, yet pings can still fail. (Pete ...) ... much less the algorithm itself.

I need to change the compression of a table from Snappy to LZO. I tried the following, but it doesn't work: created the table with SNAPPY compression; disabled it and then altered the table to use LZO compression; enabled it and ran a major compaction on the table. I found that the .regioninfo files still have COMPRESSION => 'SNAPPY'. Could anyone help?

ERROR: org.apache.hadoop.hbase.DoNotRetryIOException: Compression algorithm 'snappy' previously failed test. Set hbase.table.sanity.checks to false at conf or table descriptor if you want to bypass sanity checks

If the SNAPPY algorithm is not supported, the following error is reported, but did this exception appear when the table was created?

ERROR: org.apache.hadoop.hbase.DoNotRetryIOException: Compression algorithm 'lzo' previously failed test.

Snappy is an easy-to-understand compression algorithm that focuses on compression speed rather than compression ratio, meaning it trades compression efficacy for speed. This is especially helpful in projects like databases, where data transmission is the bottleneck rather than how much data a server farm can store.

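Distributed message queues are middleware widely used across the internet industry. In the previous lesson, I covered the steps for setting up a distributed message queue system based on Kafka and ZooKeeper, as well as how to use the Java client. For commercial-grade messaging middleware, reliability is critical, so how does Kafka ensure reliability while messages are produced, transmitted, stored, and consumed?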

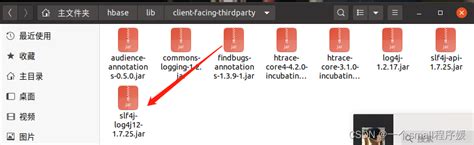

Resolving Snappy errors in HBase

Overview. Apache HBase provides the ability to perform realtime random read/write access to large datasets. HBase is built on top of Apache Hadoop and can scale to billions of rows and millions of columns. One of the features of HBase is to enable different types of compression for a column family. It is recommended that testing be done for your use case, ...

Snappy (previously known as Zippy) is a fast data compression and decompression library written in C++ by Google, based on ideas from LZ77 and open-sourced in 2011. It does not aim for maximum compression, or compatibility with any other compression library; instead, it aims for very high speeds and reasonable compression. Compression speed is 250 MB/s ...

While trying to create a table in HBase, the error "Compression algorithm 'lzo' previously failed test." appeared. This is usually caused by the system not supporting the lzo compression algorithm or not having it configured correctly. To work around the problem, the hbase.table.sanity.checks setting can be set to false to skip the sanity checks. Add the following configuration to hbase-site.xml: hbase.table.sanity ...
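As a sketch of what such an entry could look like, the property named in the error message can be written in the usual Hadoop `<property>` form inside the `<configuration>` element of hbase-site.xml. The property name and the value `false` come from the error text; everything else is the standard layout of that file.

```xml
<!-- Skips the table sanity checks, including the compression codec test. -->
<!-- This hides the error but does not install or load the missing codec. -->
<property>
  <name>hbase.table.sanity.checks</name>
  <value>false</value>
</property>
```

Bypassing the check only lets the table be created; if the codec's native library really is missing on the region servers, making the library loadable is still the actual fix.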

Time-efficiency comparison of zstd, gzip, snappy, and other compression methods (2019-04-08, category: hbase). For ZSTD, decompression time depends on the configured size of the uncompressed original data. Small files: KB scale, run 1000 times; ordinary files: KB scale, run 100 times; large files: 4 MB+, run 10 times. ZSTD: the space allocated for decompression equals the compressed size ...

snappy_test_tool can benchmark Snappy against a few other compression libraries (zlib, LZO, LZF, and QuickLZ), if they were detected at configure time. To benchmark using a given file, give the compression algorithm you want to test Snappy against (e.g. --zlib) and then a list of one or more file names on the command line.

Exception thrown if a mutation fails sanity checks. ... org.apache.hadoop.hbase.exceptions.MasterStoppedException: thrown when the master is stopped. ... This exception is thrown when attempts to read an HFile fail due to corruption or truncation issues.

An attempt to restore the snapshot leads to a failed sanity check, as expected: restore_snapshot 'snapshot-t' ERROR: org.apache.hadoop.hbase.DoNotRetryIOException: coprocessor.jar Set hbase.table.sanity.checks to false at conf or table descriptor if you want to bypass sanity checks ...

Discover the importance of sanity checks in software development as you master key steps and explore real-life examples. ... Mathematical models and algorithms undergo sanity checks to validate their accuracy and ...

Configuring the Snappy compression algorithm on an HBase cluster. 1. Background: a Hadoop HA cluster (Hadoop 2.7.7 + HBase 1.4.10) was set up in a production environment. According to the official documentation, the Snappy compression algorithm is available as of Hadoop 1.0.2.

What is a Sanity Test? A sanity test is often a quick check on something to see if it makes sense at a basic, surface level. ... You check the pre-tax total, then the after-tax total. In your mind, you expect the tax to be ...

org.apache.hadoop.hbase.DoNotRetryIOException: Compression algorithm 'snappy' previously failed test
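The pre-tax and after-tax comparison described above is easy to express as a tiny check. This is only an illustrative sketch: the function name and the 30% upper bound on a plausible tax rate are assumptions chosen for the example, not values from the text.

```python
def tax_looks_sane(pre_tax, after_tax, max_rate=0.30):
    """Return True when the implied tax is non-negative and at most
    max_rate of the pre-tax total (max_rate is an assumed plausibility bound)."""
    if pre_tax <= 0:
        return False
    tax = after_tax - pre_tax
    return 0 <= tax <= pre_tax * max_rate


print(tax_looks_sane(100, 108))  # tax of 8 on 100 is plausible
print(tax_looks_sane(100, 180))  # tax of 80 on 100 is suspicious
```

Like any sanity check, this does not prove the total is right; it only flags results that cannot possibly be correct.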

Narrow and deep: in software testing, sanity testing is a narrow and deep method to protect the components. A subset of regression testing: sanity testing is a subset of regression testing that mainly focuses on the less important units of the application; it is used to test whether the application's new features match the requirements. Unscripted: sanity testing is commonly unscripted.

Exception in thread "main" org.apache.hadoop.hbase.DoNotRetryIOException: org.apache.hadoop.hbase.DoNotRetryIOException: Compression algorithm 'lzo' previously failed test. Set hbase.table.sanity.checks to false at conf or table descriptor if you want to bypass sanity checks

Hi, I understand that to compress an existing HBase table we can use the technique below:

disable 'SNAPPY_TABLE'
alter 'SNAPPY_TABLE',{NAME=>'cf1',COMPRESSION=>'snappy'}
enable 'SNAPPY_TABLE'

However, did you find a way to compress existing data, as only new data is getting compressed? Your help is much app...
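One way to approach the question above: existing store files are only rewritten with the new codec when they are compacted, so the disable/alter/enable sequence is typically followed by a major compaction. The sketch below just builds the HBase shell commands as text; the helper name `recompress_script` is hypothetical, and the table and column family names are the ones from the question.

```python
def recompress_script(table, family, codec):
    """Return HBase shell commands that switch a column family's codec
    and then request a rewrite of the existing store files."""
    return "\n".join([
        f"disable '{table}'",
        f"alter '{table}', {{NAME => '{family}', COMPRESSION => '{codec}'}}",
        f"enable '{table}'",
        # A major compaction rewrites the store files, so older data
        # is stored with the new codec once it completes.
        f"major_compact '{table}'",
    ])


print(recompress_script("SNAPPY_TABLE", "cf1", "SNAPPY"))
```

The printed text could be saved to a file and passed to `hbase shell`. Note that `major_compact` only requests the compaction; on a large table the rewrite can take a while to finish in the background.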

hadoop

On a single core of a Core i7 processor in 64-bit mode, Snappy compresses at about 250 MB/sec or more and decompresses at about 500 MB/sec or more. Snappy is widely used inside Google, in everything from BigTable and MapReduce to our internal RPC systems. (Snappy has previously been referred to as "Zippy" in some presentations and the like.)

Zstandard and Snappy are two popular data compression libraries that offer different advantages and trade-offs. ... while the dictionary mode allows you to use a pre-defined dictionary to improve the compression ratio. ... if you are building a data pipeline that processes log files, you should test the data compression tools with log files ...

A pure Python implementation of the Snappy compression algorithm. Read more in the documentation on ReadTheDocs. View the change log. Quickstart: pip install py-snappy. Developer setup: if you would like to hack on py-snappy, please check out the Ethereum Development Tactical Manual for information on how we do: Testing; Pull Requests; Code ...

Compression ratio: GZIP compression uses more CPU resources than Snappy or LZO, but provides a higher compression ratio. General usage: GZIP is often a good choice for cold data, which is accessed infrequently. Snappy or LZO are a better choice for hot data, which is accessed frequently. Snappy often performs better than LZO. It is worth running ...

There are many and various lossless compression algorithms [11], and the field is well developed. Some algorithms offer high compression ratios (i.e. the size of the output data is very small when contrasted with the size of the input data) while others offer higher speed (i.e. the amount of time the algorithm takes to run is relatively low).
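The ratio-versus-speed trade-off described above can be observed with a few lines of code. Snappy is not in the Python standard library, so this sketch swaps in `zlib` at its fastest and strongest levels purely to illustrate the idea; the sample data and the helper name `measure` are made up for the example, and the timings will vary from machine to machine.

```python
import time
import zlib


def measure(data, level):
    """Compress data at the given zlib level; return (compression ratio, seconds taken)."""
    start = time.perf_counter()
    compressed = zlib.compress(data, level)
    elapsed = time.perf_counter() - start
    # Lossless check: decompression must give back the original bytes.
    assert zlib.decompress(compressed) == data
    # Ratio = input size / output size, so a larger number means smaller output.
    return len(data) / len(compressed), elapsed


# Repetitive, made-up sample data that loosely resembles stored cell values.
sample = b"row-key-0001,cf1:status=OK;" * 20000

for level in (1, 9):
    ratio, seconds = measure(sample, level)
    print(f"level {level}: ratio {ratio:.1f}, {seconds * 1000:.2f} ms")
```

Running the same kind of measurement on a sample of your own data, with the codecs you are actually considering, is the sort of use-case testing the snippets above recommend.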

Define Clear Test Scenarios: Identify and define the critical scenarios to be automated. This clarity ensures that the most important areas are tested effectively. Use Modular Test Scripts: Create modular and reusable test scripts to simplify maintenance and enhance scalability. Integrate with CI/CD: Integrate automated sanity checks into your CI/CD pipeline ...

Update Compression/TestCompressionTest: LZ4, SNAPPY, LZO

How to compress existing HBase data using Snappy

